One of the major challenges facing air quality scientists and citizen scientists is the dearth of information on the performance of low-cost gas sensors. Although manufacturers provide data sheets describing the performance of their wares, these data sheets are usually incomplete, lacking the information needed to evaluate whether a gas sensor is suitable for mobile ambient air quality monitoring. Since the data sheets don’t provide the information we need, we decided to set up our own lab for evaluating the performance of low-cost gas sensors.

In support of the New York Hall of Science's Collect, Construct, Change (C3) youth environmental science program, and in partnership with the New York City College of Technology, we evaluated the performance of three gas sensors: two carbon monoxide (CO) sensors, the Figaro TGS-2442 and the Alphasense CO-B4, and one nitrogen dioxide (NO2) sensor, the SGX MiCS-2710. It’s important to note that in order to test the sensors, we first had to develop instruments for acquiring and interpreting their signal outputs. It's possible that our instruments, the AirCasting Air Monitor and the AirGo, introduced errors that have not been accounted for, so our results should not be considered the final word on the performance of these sensors. It should also be noted that the sensors we tested have warm-up times approaching one hour. This compromises their utility for mobile air quality monitoring, where the ability to switch on an instrument and immediately begin recording measurements is an important feature.

With an equipment budget of $5,000, we knew from the outset that our lab setup would be bare bones. We had just enough money to purchase cylinders of “zero air”, NO2, and CO; a manually adjustable gas blender for mixing different concentrations of our target gases; and materials for constructing a DIY exposure chamber. We didn’t have sufficient resources to purchase reference analyzers to confirm our gas blending targets, scrub our zero air of CO, control for temperature and humidity, or test for response to interfering gases. The complete bill of materials for our lab can be downloaded here, including some preliminary sketches of the setup authored by Charles Eckman, Lab Manager at the NorLab Division of Norco, Inc., along with some additional photos of our actual setup.
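A manual gas blender sets the target concentration by dilution: span gas from the cylinder is mixed with zero air, and the result is the cylinder concentration scaled by the span gas's share of the total flow. Here's a rough sketch of that arithmetic, assuming ideal mixing. The cylinder concentration, flow rates, and the 200 ppb background are hypothetical values for illustration, not the settings we used, and the function names are our own.

```python
def blended_ppb(cylinder_ppb, span_flow, zero_flow, zero_air_ppb=0.0):
    """Concentration after mixing span gas with zero air, assuming ideal mixing.

    zero_air_ppb models any target gas already present in the "zero air"
    (e.g. unscrubbed CO); the default assumes a perfectly clean diluent.
    """
    total = span_flow + zero_flow
    if total <= 0:
        raise ValueError("total flow must be positive")
    return (cylinder_ppb * span_flow + zero_air_ppb * zero_flow) / total


def span_flow_for_target(cylinder_ppb, target_ppb, total_flow):
    """Span-gas flow needed to reach target_ppb at a fixed total flow (clean zero air)."""
    if not 0 <= target_ppb <= cylinder_ppb:
        raise ValueError("target must lie between 0 and the cylinder concentration")
    return total_flow * target_ppb / cylinder_ppb


# Hypothetical numbers: a 10 ppm (10,000 ppb) CO cylinder and 1 L/min total flow.
CYLINDER_PPB = 10_000.0
TOTAL_FLOW_L_MIN = 1.0
for target in (100, 500, 1000, 2000):
    span = span_flow_for_target(CYLINDER_PPB, target, TOTAL_FLOW_L_MIN)
    zero = TOTAL_FLOW_L_MIN - span
    print(f"{target:>5} ppb: span {span * 1000:.0f} mL/min, zero air {zero * 1000:.0f} mL/min")

# With a hypothetical 200 ppb of CO in the zero air, a nominal 100 ppb blend is ~298 ppb.
print(round(blended_ppb(CYLINDER_PPB, 0.01, 0.99, zero_air_ppb=200.0), 1))
```

The background term illustrates why unscrubbed zero air makes low-concentration steps hard to resolve: the sensor sees the background plus the blend, not the nominal target.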

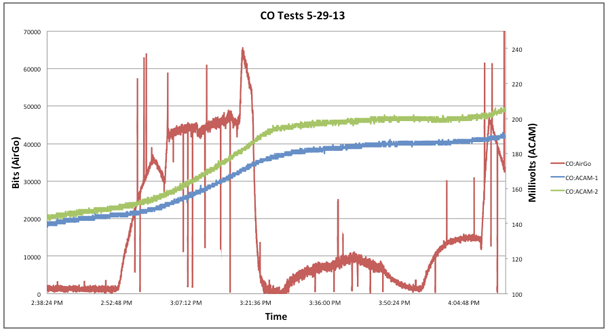

One thing we learned quickly was that our initial exposure chamber, approximately two liters in volume, was too large given the low flow rates our gas blender could achieve. Although its volume prevented us from establishing an equilibrium gas concentration, the chamber could accommodate multiple instruments, making it suitable for side-by-side comparisons. The above graph shows the results of one of these test runs, in which we compared the performance of two TGS-2442s to one CO-B4 while changing the CO concentration inside the chamber. Note how the red line representing the CO-B4 response moves up and down dramatically, whereas the blue and green lines representing the two TGS-2442s rise slowly and then stabilize. Right off the bat, we realized that the TGS-2442 was unsuitable for ambient air quality monitoring: it was extremely slow to respond to changes in CO concentration and became saturated, leaving it unable to respond when CO concentrations dropped.
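Why chamber volume matters can be sketched with a simple well-mixed chamber model. This is an idealization we're assuming here (real chambers have dead zones and instrument housings that slow mixing further). If gas at concentration C_in flows through a chamber of volume V at flow rate Q, the chamber concentration approaches C_in exponentially with time constant τ = V/Q, so C(t) = C_in(1 - e^(-t/τ)). The flow rates and the 0.2 L "small chamber" volume below are hypothetical; only the roughly 2 L first chamber comes from our setup.

```python
import math


def chamber_concentration(t_min, c_in_ppb, volume_l, flow_l_per_min, c0_ppb=0.0):
    """Well-mixed chamber concentration t minutes after the inlet steps to c_in_ppb."""
    if flow_l_per_min <= 0:
        raise ValueError("flow must be positive")
    tau = volume_l / flow_l_per_min  # residence time (time constant) in minutes
    return c_in_ppb + (c0_ppb - c_in_ppb) * math.exp(-t_min / tau)


def minutes_to_fraction(fraction, volume_l, flow_l_per_min):
    """Minutes for a well-mixed chamber to complete a given fraction of a step change."""
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    tau = volume_l / flow_l_per_min
    return -tau * math.log(1 - fraction)


# ~2 L matches our first chamber; 0.2 L and both flow rates are hypothetical.
for volume in (2.0, 0.2):
    for flow in (0.1, 0.5):
        t95 = minutes_to_fraction(0.95, volume, flow)
        print(f"V={volume} L, Q={flow} L/min -> 95% of step after {t95:.1f} min")
```

Under these assumptions a 2 L chamber fed at 0.1 L/min needs about an hour to get within 5% of a new concentration, which is longer than a practical step in a test run; shrinking the volume shortens that time in direct proportion.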

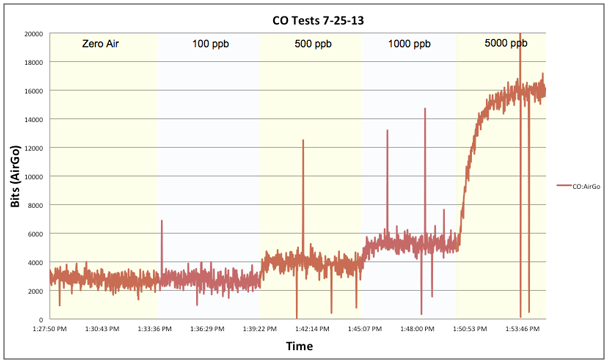

Upon discovering that our exposure chamber was too big, we built a smaller chamber that could fit only one instrument at a time. This made side-by-side comparisons impossible, but it enabled greater precision in generating gas concentrations. The above graph illustrates the results from one of our CO-B4 step tests. We were impressed with the CO-B4's performance. It had a fast response time, a suitable lower detection limit, and, when we compared test runs between sensors, manageable out-of-the-box variability. As mentioned earlier, we didn’t have the resources to scrub our “zero air” of CO, and this can be seen in our results: there is little to no change in the response between “zero air” and 100 ppb. However, tests conducted at the New York State Department of Environmental Conservation’s Queens College air monitoring station indicate that the CO-B4's lower detection limit may match the manufacturer's claim of less than 5 ppb. At approximately $90, this electrochemical sensor isn’t cheap, but depending on your budget and intended use, its favorable performance may be worth the extra expense.
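Response time in a step test is often summarized as t90: the time after a concentration step for the sensor reading to cover 90% of the distance from its pre-step baseline to its new plateau. Here's a minimal sketch, assuming (time, reading) samples, a known step time, and that baseline and plateau can be estimated by averaging a few samples just before the step and at the end of the record. The function name, the averaging window, and the synthetic data are hypothetical, not our actual analysis code.

```python
import math
from statistics import mean


def t90_seconds(times, readings, step_time, n_avg=5):
    """Seconds after step_time until the reading first covers 90% of the step.

    Baseline = mean of the last n_avg samples before the step;
    plateau  = mean of the last n_avg samples in the record.
    Handles upward and downward steps. Returns None if 90% is never reached.
    """
    before = [r for t, r in zip(times, readings) if t < step_time]
    if len(before) < n_avg or len(readings) < n_avg:
        raise ValueError("not enough samples to estimate baseline and plateau")
    baseline = mean(before[-n_avg:])
    plateau = mean(readings[-n_avg:])
    if plateau == baseline:
        return None  # no measurable step
    threshold = baseline + 0.9 * (plateau - baseline)
    rising = plateau > baseline
    for t, r in zip(times, readings):
        if t < step_time:
            continue
        if (rising and r >= threshold) or (not rising and r <= threshold):
            return t - step_time
    return None


# Synthetic step test (hypothetical): 1 Hz samples, step at t = 60 s,
# first-order sensor response with a 10 s time constant.
times = list(range(300))
readings = [0.0 if t < 60 else 1000.0 * (1 - math.exp(-(t - 60) / 10)) for t in times]
print(t90_seconds(times, readings, step_time=60))  # → 24
```

The estimate is only as good as the plateau: if a record ends before the sensor has settled, the computed t90 will be too short.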

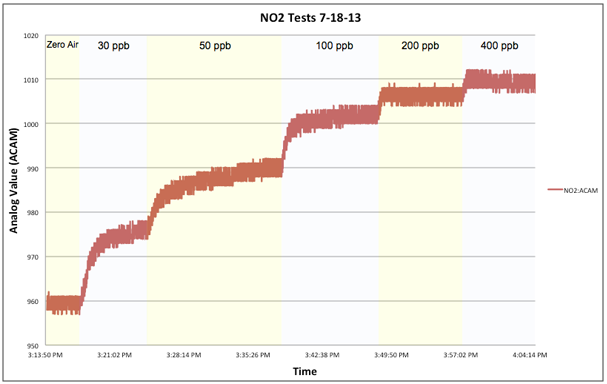

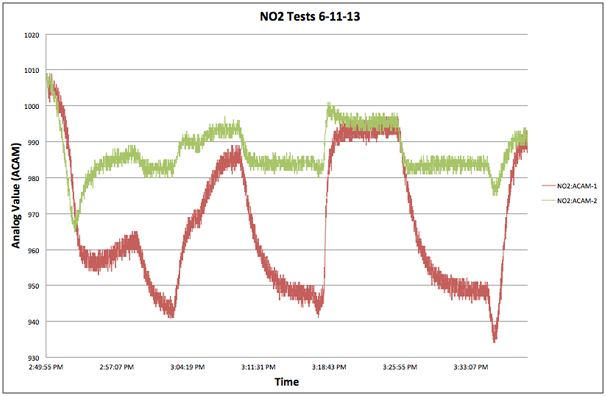

After the disappointing results from our TGS-2442 tests, we were surprised to find that the MiCS-2710, also a metal-oxide sensor, performed as well as it did (see the first graph above). Its multi-minute response times weren’t nearly as rapid as those of the CO-B4, which responded in seconds, but they were adequate, and tests conducted by the EPA on the AirCasting Air Monitor indicated a lower detection limit approaching 10 ppb! That is a fairly good result considering its extremely low cost: it retails for approximately $5. However, a word of caution is in order: comparison tests (see the second graph above) revealed high out-of-the-box variability. Because no two sensors responded identically, each unit would need to be individually calibrated. Also, the sensitivity of the MiCS-2710 diminished as the NO2 concentration climbed above 100 ppb.
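Individual calibration means fitting a separate response curve for each unit against known concentrations. A minimal sketch, assuming each unit's raw output is roughly linear in NO2 over the range where it stays sensitive, so the fit only uses points at or below 100 ppb. Metal-oxide sensors are frequently nonlinear, so a real calibration may need a different curve shape. The unit names, raw readings, and the `max_ppb` cutoff parameter are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LinearCal:
    slope: float      # raw signal units per ppb
    intercept: float  # raw signal at 0 ppb

    def to_ppb(self, raw: float) -> float:
        """Invert raw = slope * ppb + intercept to estimate NO2 in ppb."""
        return (raw - self.intercept) / self.slope


def fit_linear(ref_ppb, raw, max_ppb=100.0):
    """Least-squares fit of raw signal vs reference NO2, using only points <= max_ppb."""
    pts = [(x, y) for x, y in zip(ref_ppb, raw) if x <= max_ppb]
    if len(pts) < 2:
        raise ValueError("need at least two calibration points in range")
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    if sxx == 0 or sxy == 0:
        raise ValueError("calibration points show no usable response")
    slope = sxy / sxx
    return LinearCal(slope=slope, intercept=my - slope * mx)


# Hypothetical step-test data: two units exposed to the same NO2 steps.
ref = [0, 25, 50, 100, 200]
raw_by_unit = {
    "unit_A": [120, 170, 220, 320, 380],  # response flattens above 100 ppb
    "unit_B": [80, 120, 160, 240, 270],
}
cals = {unit: fit_linear(ref, raw) for unit, raw in raw_by_unit.items()}
for unit, cal in cals.items():
    print(f"{unit}: slope={cal.slope:.2f}, intercept={cal.intercept:.1f}, "
          f"raw 200 -> {cal.to_ppb(200):.1f} ppb")
```

In this made-up example the same raw reading of 200 maps to about 40 ppb on one unit and 75 ppb on the other. That gap is the practical meaning of high out-of-the-box variability, and it is why a single shared calibration would not work.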

Special thanks to Charlie Eckman who helped with our lab setup and protocol; Peter Spellane, Chair of the New York City College of Technology Chemistry Dept., who secured lab space for our experiments and served as an advisor; and Raymond Yap and Leroy Williams who ran all the performance tests. Funding for this project was provided by The Hive Digital Media Learning Fund in The New York Community Trust.
